Drone Hover Without GPS

Project Rundown

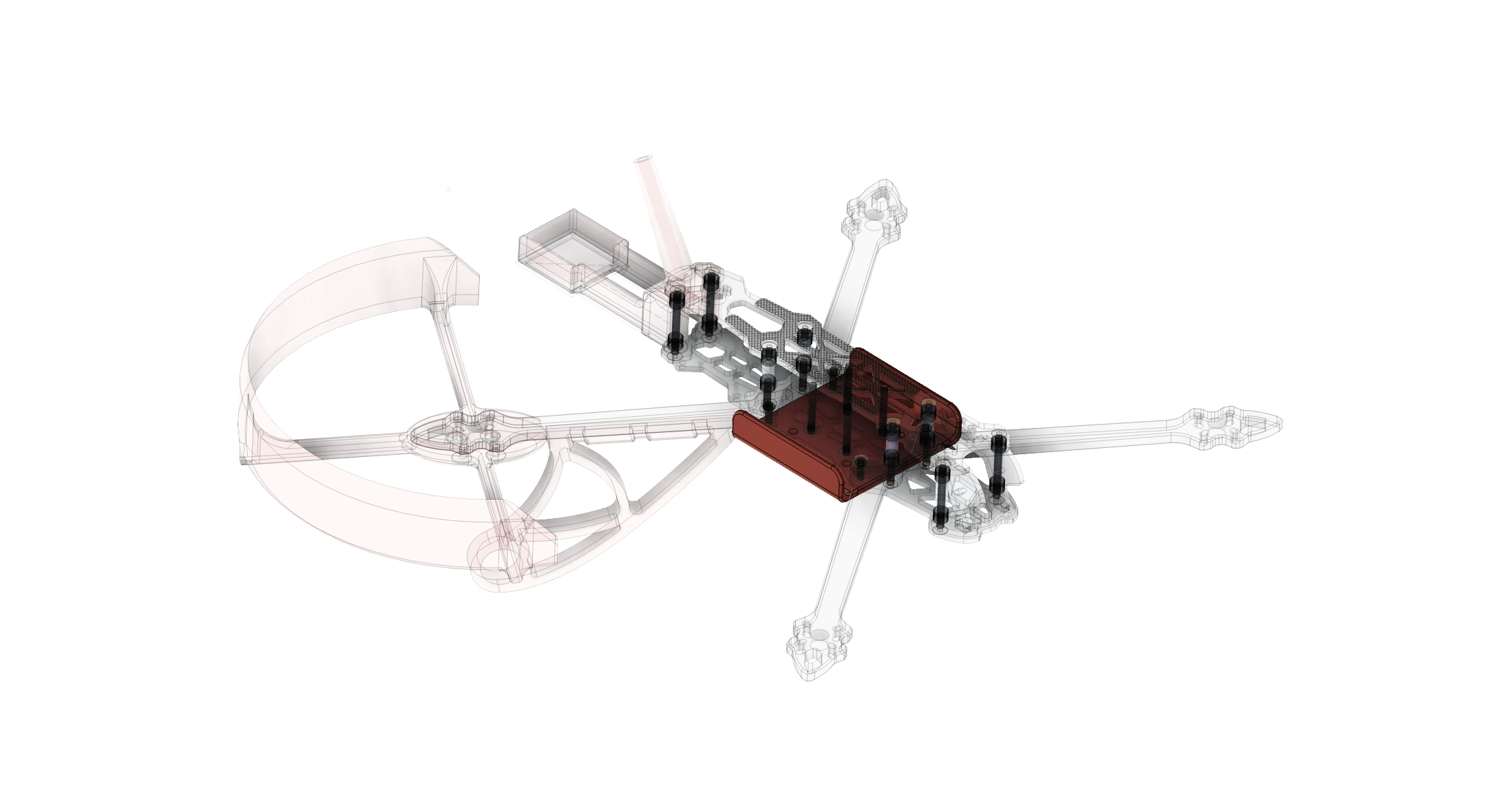

Our system enables UAVs to hover stably by combining visual odometry, LiDAR, and sensor fusion. These methods work together to estimate position accurately and keep flight steady, even in GPS-denied environments. Optical flow sensing adds a ground-relative motion estimate, allowing drones to navigate complex surroundings without external signals.

This innovation is particularly valuable for warehouse inspections, construction site monitoring, urban infrastructure evaluations, and disaster response missions. It also supports drone-based communication relays and tactical operations. With precision motion tracking, obstacle detection, and AI-powered drift correction, the technology integrates seamlessly into existing UAV systems, ensuring reliable performance in critical operations.

Technical Specifications

- Effective Range: 20 m to 100 m

- Processing Latency: 20 ms

- Communication: MAVLink protocol

- Navigation Algorithms: Advanced visual odometry combined with sensor fusion

- Sensor Fusion: Integrates real-time data from onboard cameras, IMUs, barometers, magnetometers, and gyroscopes

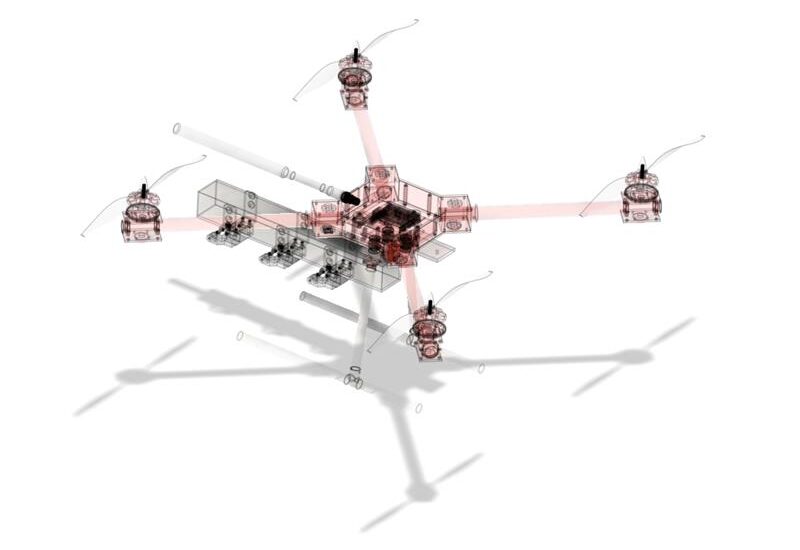

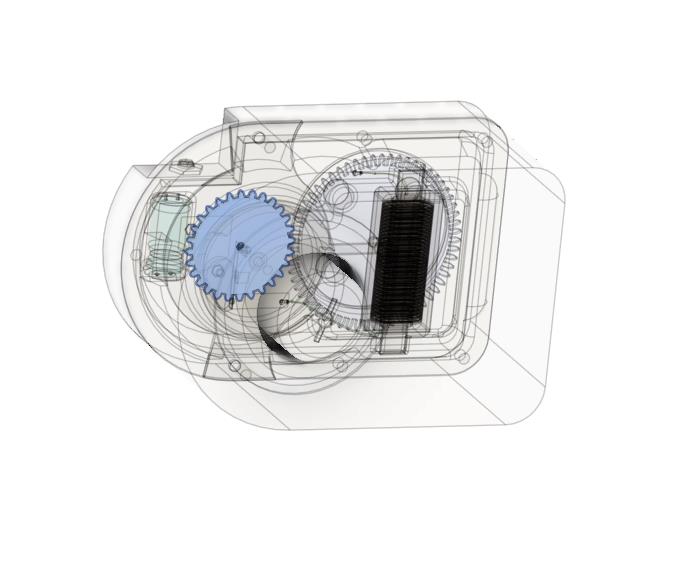

Hardware Overview

- Sensors: Integrated with the flight controller; LiDAR optional

- Processing Power: Quad-core Arm Cortex-A76, 2.4 GHz, 4 GB RAM

- Optical Flow: Camera module, up to 4 MP

- Interface: Web app designed for setup

- Connections: Wireless

How It Works

To integrate GPS-free stabilization into existing drones, several technologies and techniques can be used:

Advanced IMUs

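High-grade inertial measurement units (IMUs) with precision accelerometers and gyroscopes measure acceleration and angular rate, from which the drone’s position and orientation are estimated. Because these estimates come from integrating sensor readings, small sensor errors accumulate over time; upgrading from standard IMUs reduces that drift and improves stability and navigation in GPS-denied areas.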
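
To illustrate why IMU quality matters, here is a minimal sketch of one-dimensional dead reckoning. The function name `dead_reckon` and all numbers (bias, sample rate, duration) are hypothetical and are not taken from the system described here.

```python
def dead_reckon(accels, dt):
    """Integrate 1-D acceleration samples twice (forward Euler) to get position."""
    velocity = 0.0
    position = 0.0
    for a in accels:
        velocity += a * dt         # acceleration -> velocity
        position += velocity * dt  # velocity -> position
    return position


# Assumed scenario: a hovering drone (true acceleration is zero) whose
# accelerometer reports a constant 0.05 m/s^2 bias, sampled at 100 Hz for 10 s.
bias = 0.05
dt = 0.01
drift = dead_reckon([bias] * 1000, dt)
print(f"position error after 10 s: {drift:.2f} m")
```

Under these assumed numbers the position error grows to roughly 2.5 m in ten seconds, and it grows with the square of time. This is why inertial data alone is not enough to hold a hover and is usually combined with other sensors.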

Optical Flow

Optical flow sensors track how ground texture moves between successive camera frames to detect motion, helping drones maintain stability by referencing visual cues in real time.
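
The conversion from image motion to ground speed can be sketched as follows, assuming a downward-facing camera, flat ground, and small angles. The function name, parameters, and sign convention for the gyro term are illustrative assumptions, not the product's actual interface.

```python
def flow_to_ground_velocity(flow_px, dt, altitude_m, focal_px, gyro_rate=0.0):
    """Convert pixel displacement between two frames to ground-relative speed (m/s).

    flow_px    : image displacement along one axis between frames, in pixels
    dt         : time between the two frames, in seconds
    altitude_m : height above the ground, e.g. from a rangefinder or barometer
    focal_px   : camera focal length expressed in pixels
    gyro_rate  : body rotation rate about the matching axis, in rad/s
                 (assumed to have the same sign as the flow it induces)
    """
    # Small-angle approximation: pixels / focal length is an angle in radians.
    angular_rate = flow_px / (focal_px * dt)
    # Rotation of the drone also shifts the image; remove it using the gyro.
    translational_rate = angular_rate - gyro_rate
    # Scale the remaining angular rate by height to get metres per second.
    return translational_rate * altitude_m


# Assumed example: 10 px of flow in 20 ms at 2 m altitude with a 500 px focal length.
speed = flow_to_ground_velocity(flow_px=10, dt=0.02, altitude_m=2.0, focal_px=500)
print(f"estimated ground speed: {speed:.2f} m/s")
```

The altitude term shows why optical flow needs a height estimate: the same pixel motion corresponds to a faster ground speed the higher the drone flies.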

Neural Networks and AI

Machine learning algorithms analyze sensor data to predict and counteract drift, delivering robust navigation performance when conventional systems are unavailable.
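
As a deliberately simplified illustration of learning a drift term from data, the sketch below fits a single constant bias with a least-mean-squares update. It stands in for the idea only; it is far simpler than a neural network, and the function name, learning rate, and residual definition are assumptions rather than the model used in this system.

```python
def learn_drift_bias(residuals, learning_rate=0.1):
    """Fit a constant bias to a stream of residuals by online gradient descent.

    Each residual is assumed to be the difference between an IMU-predicted
    quantity and the same quantity measured by a drift-free reference
    (for example, a visual velocity estimate).
    """
    bias = 0.0
    for r in residuals:
        # Gradient step on the squared error (r - bias)^2 / 2.
        bias += learning_rate * (r - bias)
    return bias


# Assumed data: the IMU consistently over-reports by 0.05 units.
learned = learn_drift_bias([0.05] * 200)
print(f"learned bias: {learned:.3f}")
```

Once learned, such a bias could be subtracted from later IMU readings; a real model would typically use many more inputs and parameters.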

Sensor Fusion

Combining data from IMUs, visual systems, barometric sensors, and proximity detectors creates a comprehensive model of the drone’s surroundings, enabling precise control and stabilization in dynamic scenarios.
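
One common way to combine such sources is a Kalman filter. The source does not say which filter this system uses, so the scalar filter below is only a sketch under that assumption. It fuses IMU acceleration with an optical-flow velocity measurement, and the noise parameters `q` and `r` are hypothetical tuning values.

```python
def fuse_velocity(accels, flow_velocities, dt, q=1e-4, r=1e-2):
    """Scalar Kalman filter: predict with IMU acceleration, correct with flow velocity."""
    v, p = 0.0, 1.0  # velocity estimate and its variance
    for a, z in zip(accels, flow_velocities):
        # Predict: integrate the (possibly biased) accelerometer reading.
        v += a * dt
        p += q
        # Update: blend in the optical-flow velocity measurement.
        k = p / (p + r)  # Kalman gain
        v += k * (z - v)
        p *= 1.0 - k
    return v


# Assumed scenario: hovering drone (true velocity 0), accelerometer bias of
# 0.05 m/s^2, noise-free optical flow reporting 0 m/s, 100 Hz for 10 s.
n, dt, bias = 1000, 0.01, 0.05
imu_only = sum(bias * dt for _ in range(n))
fused = fuse_velocity([bias] * n, [0.0] * n, dt)
print(f"IMU only: {imu_only:.3f} m/s, fused: {fused:.4f} m/s")
```

In this assumed scenario the IMU-only velocity estimate drifts to about 0.5 m/s, while the fused estimate stays within a few millimetres per second of zero, because each optical-flow measurement pulls the prediction back toward the observed motion.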